A vector database is specialized for storing high-dimensional vectors and for efficiently performing queries on them. Rather than searching on exact values or text-based queries, vector databases let you search based on semantic similarity. For instance, in a text embedding scenario, you can find documents that are semantically similar to a given query, even if they do not share the same keywords.

This allows for usage in areas such as:

With SurrealDB’s SurrealQL you can define vector fields, store numeric arrays as embeddings, create indexes, and perform similarity queries. SurrealDB’s approach unifies these features to allow you to move from multiple data stores (a dedicated vector database plus separate document database and finally a graph database to stitch them together) to a single source of truth.

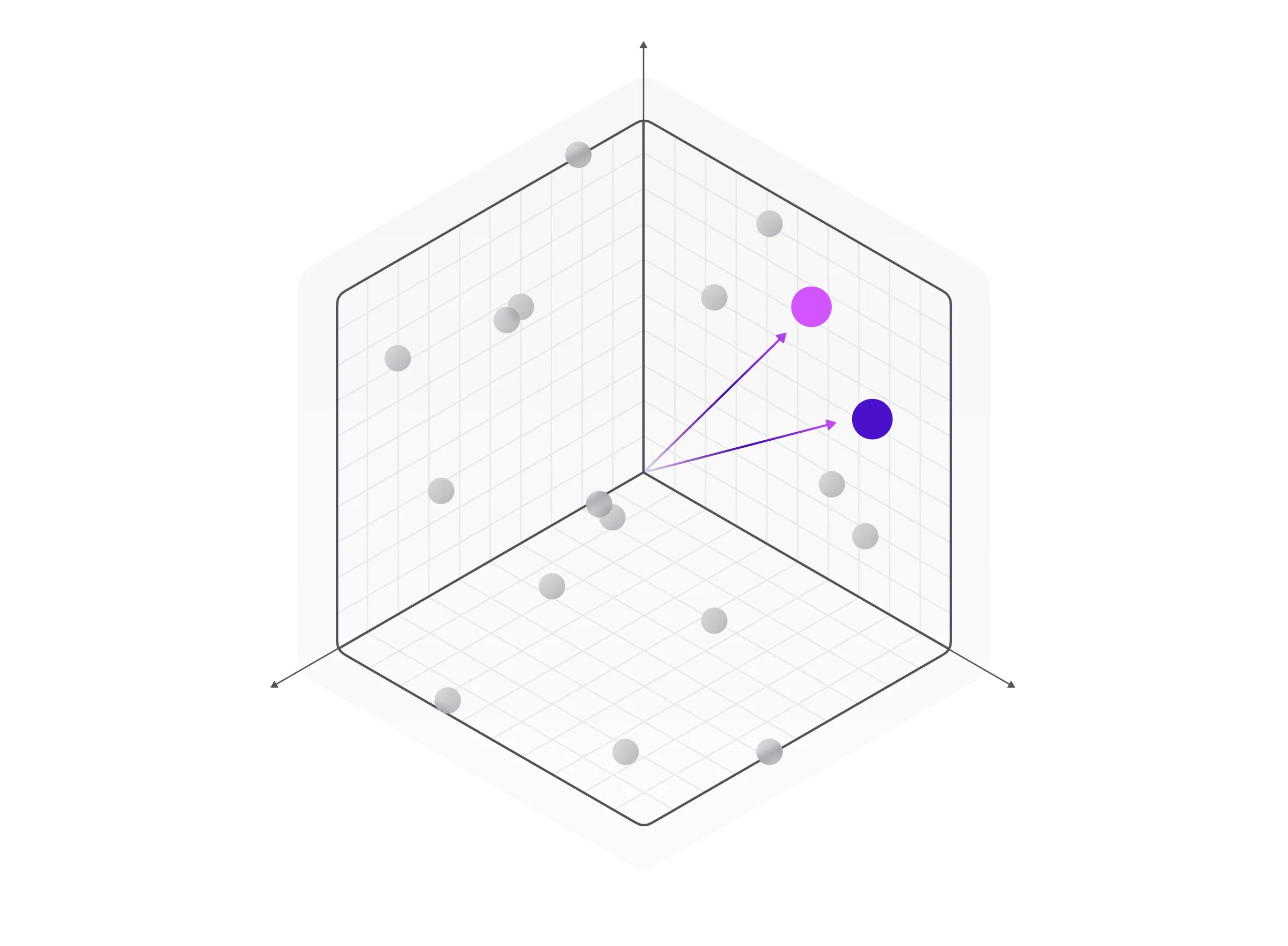

But how do you “think” in a vector database? Unlike relational or document models, where the focus is on well-defined schemas and relationships, vector databases revolve around embeddings—numerical representations of objects (like text, images, audio snippets, etc.) in a continuous vector space. This allows you to design data structures and queries to exploit these embeddings for similarity search or AI-driven retrieval.

Vector search is a search mechanism that goes beyond traditional keyword matching and text-based search methods to capture deeper characteristics and similarities between data.

Vector search isn’t new to the world of data science. Gerard Salton, known as the Father of Information Retrieval, introduced the Vector Space Model, cosine similarity, and TF-IDF for information retrieval around 1960.

Vector search converts data such as text, images, or sounds into numerical vectors, called vector embeddings. You can think of vector embeddings as cells. In the same way that cells form the basic structural and biological unit of all known living organisms, vector embeddings serve as the basic units of data representation in vector search.

In practice, embeddings are typically dense vectors of real numbers that capture the semantic or contextual meaning of data. For instance, in Natural Language Processing (NLP), a word or sentence can be transformed into a vector of length 128, 256, 768, or even more dimensions. The idea is that similar objects (in meaning) end up having similar vector representations, making it possible to compute how close they are in the vector space.

Embeddings themselves are not generated by the database. Instead, they depend on which model is used to generate them. Various companies such as OpenAI and Mistral have both free and paid models, while many other free models exist to generate embeddings.

Inside a database they will be stored in this sort of manner.

To store vectors in SurrealDB, you typically define a field within your data schema dedicated to holding the vector data. These vectors represent data points in a vector space and can be used for various applications, from recommendation systems to image recognition. Below is an example of how to create records with vector embeddings:

There are no strict rules or limitations regarding the length of the embeddings, and they can be as large as needed. Just keep in mind that larger embeddings lead to more data to process and that can affect performance and query times based on your physical hardware.

In fact, embeddings retrieved from a model can be cut down to any length you prefer if the accuracy is still acceptable for your use case.
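As a rough illustration of truncation, the Python sketch below keeps only the first few dimensions of two embeddings and compares the cosine similarity before and after. The 8-dimensional vectors and the helper names `cosine_similarity` and `truncate` are made up for this example; real embeddings come from a model, and whether accuracy survives truncation depends on that model.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def truncate(embedding, length):
    """Keep only the first `length` dimensions of an embedding."""
    return embedding[:length]

# Hypothetical 8-dimensional embeddings; real ones come from an embedding model.
doc = [0.9, 0.1, 0.4, 0.2, 0.05, 0.03, 0.02, 0.01]
query = [0.8, 0.2, 0.5, 0.1, 0.01, 0.04, 0.03, 0.02]

full_score = cosine_similarity(doc, query)
short_score = cosine_similarity(truncate(doc, 4), truncate(query, 4))

print(f"full (8 dims):      {full_score:.4f}")
print(f"truncated (4 dims): {short_score:.4f}")
```

If the two scores rank your records in the same order for a representative set of queries, the shorter vectors may be good enough and will be cheaper to store and compare.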

SurrealDB supports full-text search and Vector Search. Full-text search (FTS) involves indexing documents using an FTS index that makes use of an analyzer that breaks down text using tokenizers and filters.

The image above is a Google search for the word “lead”, a word with more than one definition (and pronunciation!). Lead can mean ‘taking initiative’, as well as the chemical element with the symbol ‘Pb’.

Let’s consider this in the context of a database of liquid samples which note down harmful chemicals that are found in them.

In the example below, we have a table called liquids with a sample field and a content field. Next, we can define a full-text index on the content field by first defining an analyzer called liquid_analyzer. We can then define an index on the content field of the liquids table and set our custom analyzer (liquid_analyzer) to search through the index.

Then, using a SELECT statement to retrieve all the samples containing the chemical lead will also bring up samples that merely mention the word lead.

If you read through the content of the tap water sample, you’ll notice that it does not contain any lead, but it does mention the word lead in “The team lead by Dr. Rose…”, which means that the team was guided by Dr. Rose.

The search pulled up both records although the tap water sample had no lead in it. This example shows us that while full-text search does a great job at matching query terms with indexed documents, on its own it may not be the best solution for use cases where the query terms have deeper context and scope for ambiguity.
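The ambiguity can be reproduced outside the database with a minimal keyword matcher. The Python sketch below is not how SurrealDB's analyzers work internally; the `tokenize` and `keyword_search` helpers and both sample texts are hypothetical, loosely modelled on the liquids example.

```python
import re

def tokenize(text):
    """Lowercase the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())

def keyword_search(records, term):
    """Return the ids of records whose content contains `term` as a token."""
    term = term.lower()
    return [rid for rid, content in records.items() if term in tokenize(content)]

# Hypothetical sample texts, loosely modelled on the liquids example.
records = {
    "sea_water": "The sample contains traces of lead and mercury.",
    "tap_water": "The team lead by Dr. Rose found no harmful chemicals.",
}

matches = keyword_search(records, "lead")
print(matches)  # both records match, although only one mentions the metal
```

A token match has no notion of which sense of the word is meant, which is the gap that embeddings are intended to close.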

The vector search feature of SurrealDB will help you do more and dig deeper into your data. It can be used in place of, or together with, full-text search.

For example, still using the same liquids table, you can store the chemical composition of the liquid samples in a vector format.

Notice that we have added an embedding field to the table. This field will store the vector embeddings of the content field so we can perform vector searches on it.

In the example above you can see that the results are more accurate. The search pulled up only the results in which the word “lead” was used to mean the material, while the final liquidsVector record had the lowest score. This is the advantage of using vector search over full-text search.

Another use case for vector search is in the field of facial recognition. For example, if you wanted to search for an actor or actress who looked like you from an extensive dataset of movie artists, you would first use an embedding model to convert the artists’ images and details into vector embeddings and then use SurrealQL to find the artist whose embedding most closely resembles the embedding of your own face. The more characteristics you decide to include in your vector embeddings, the higher the dimensionality of your vector will be, potentially improving the accuracy of the matches but also increasing the complexity of the vector search.

SurrealDB provides vector functions for most of the major numerical computations done on vectors. They include functions for element-wise addition, division and even normalisation.

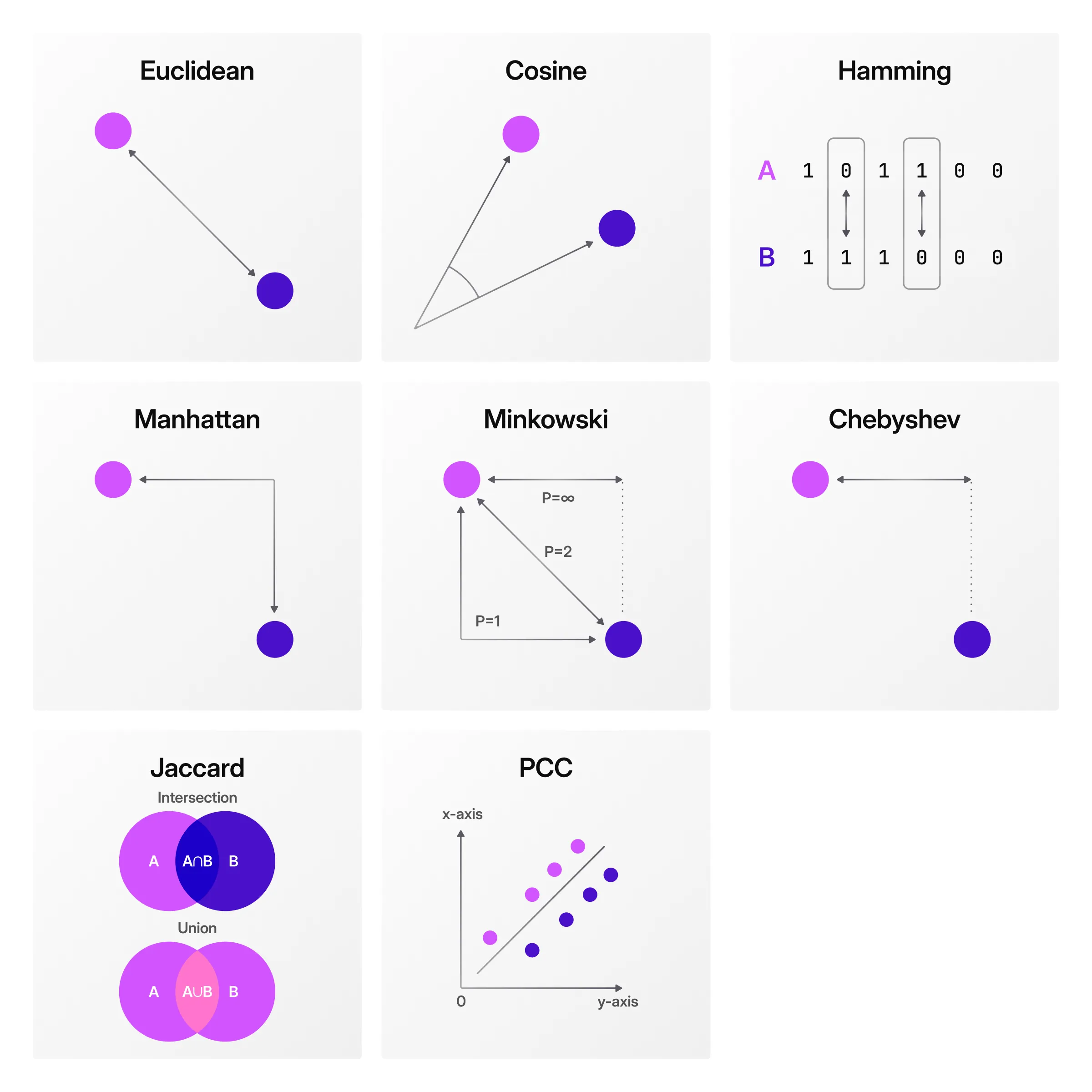

They also include similarity and distance functions, which help in understanding how similar or dissimilar two vectors are. Usually, the vector with the smallest distance or the largest cosine similarity value (closest to 1) is deemed the most similar to the item you are trying to search for.

The choice of distance or similarity function depends on the nature of your data and the specific requirements of your application.

In the liquids examples, we assumed that the embeddings represented the harmfulness of lead (as a substance). We used the vector::similarity::cosine function because cosine similarity is typically preferred when absolute distances are less important, but proportions and direction matter more.
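The difference between a direction-based and a distance-based measure can be seen in a small Python sketch. The three vectors are arbitrary illustrative values, and the helper functions are plain re-implementations of the standard formulas rather than SurrealDB's own code.

```python
import math

def euclidean_distance(a, b):
    """Straight-line distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

a = [1.0, 2.0, 3.0]
b = [2.0, 4.0, 6.0]  # same direction as a, twice the magnitude
c = [3.0, 2.0, 1.0]  # same magnitude as a, different direction

cos_ab = cosine_similarity(a, b)
cos_ac = cosine_similarity(a, c)
dist_ab = euclidean_distance(a, b)
dist_ac = euclidean_distance(a, c)

print(f"cosine:    a~b {cos_ab:.3f}   a~c {cos_ac:.3f}")
print(f"euclidean: a~b {dist_ab:.3f}   a~c {dist_ac:.3f}")
```

Cosine similarity treats `b` as the best match for `a` because the two point the same way, while Euclidean distance prefers `c` because it lies closer in absolute terms. Which behaviour you want depends on whether magnitude carries meaning in your embeddings.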

When it comes to search, you can always use brute force.

In SurrealDB, you can use the brute force approach to search through your vector embeddings and data.

Brute force search compares a query vector against all vectors in the dataset to find the closest match. As this is a brute-force approach, you do not create an index for this approach.
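Conceptually, a brute force search is a loop over every stored vector. The Python sketch below illustrates the idea with Euclidean distance; the record ids, the vectors and the `brute_force_knn` helper are hypothetical and only meant to show the shape of the computation.

```python
import math

def euclidean_distance(a, b):
    """Straight-line distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def brute_force_knn(query, dataset, k):
    """Score every vector against `query` and return the k closest (id, distance) pairs."""
    scored = [(rid, euclidean_distance(query, vec)) for rid, vec in dataset.items()]
    scored.sort(key=lambda pair: pair[1])
    return scored[:k]

# Hypothetical records keyed by id.
dataset = {
    "pts:1": [1.0, 2.0, 3.0, 4.0],
    "pts:2": [4.0, 5.0, 6.0, 7.0],
    "pts:3": [8.0, 9.0, 10.0, 11.0],
    "pts:4": [2.0, 3.0, 4.0, 5.0],
}

nearest = brute_force_knn([2.0, 3.0, 4.0, 5.0], dataset, k=2)
for rid, distance in nearest:
    print(rid, distance)
```

Every query touches every record, so the cost grows linearly with the size of the dataset and with the vector dimension.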

The brute force approach for finding the nearest neighbour is generally preferred in the following use cases:

While brute force can give you exact results, it’s computationally expensive for large datasets.

In most cases, you do not need a 100% exact match, and you can trade some accuracy for speed by finding the approximate nearest neighbours of a query vector, which matters most for large, high-dimensional datasets.

This is where vector indexes come in.

In SurrealDB, you can perform a vector search using a Hierarchical Navigable Small World (HNSW) index, a state-of-the-art algorithm for approximate nearest neighbour search in high-dimensional spaces. It is a proximity graph-based index that offers a balance between search efficiency and accuracy.

By design, HNSW currently operates as an “in-memory” structure. Introducing persistence to this feature, while beneficial for retaining index states, is an ongoing area of development. Our goal is to balance the speed of data ingestion with the advantages of persistence.

You can also use the REBUILD statement, which allows for the manual rebuilding of indexes as needed. This approach ensures that while we explore persistence options, we maintain the optimal performance that users expect from HNSW, providing flexibility and control over the indexing process.

The vector::distance::knn() function from SurrealDB returns the distance computed between vectors by the KNN operator. This function can be used to avoid recomputation of the distance in every select query.

Consider a scenario where you’re searching for actors who look like you but they should have won an Oscar. You set a flag, which is true for actors who’ve won the golden trophy.

Let’s create a dataset of actors and define an HNSW index on the embeddings field.

actor:1 and actor:2 have the closest resemblance with your query vector and also have won an Oscar.
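The same idea of combining a condition with a nearest-neighbour search can be sketched in Python as "filter, then rank". The actor records, flag values and embeddings below are invented for illustration and are not the dataset used in the example; the sketch also ranks exhaustively, whereas an HNSW index returns approximate results.

```python
import math

def euclidean_distance(a, b):
    """Straight-line distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def filtered_knn(query, records, k, predicate):
    """Keep records that satisfy `predicate`, then return the ids of the k closest to `query`."""
    candidates = [r for r in records if predicate(r)]
    candidates.sort(key=lambda r: euclidean_distance(query, r["embedding"]))
    return [r["id"] for r in candidates[:k]]

# Hypothetical actors; `won_oscar` plays the role of the flag described above.
actors = [
    {"id": "actor:1", "won_oscar": True, "embedding": [0.10, 0.20, 0.30, 0.40]},
    {"id": "actor:2", "won_oscar": True, "embedding": [0.15, 0.25, 0.35, 0.45]},
    {"id": "actor:3", "won_oscar": False, "embedding": [0.12, 0.22, 0.32, 0.42]},
    {"id": "actor:4", "won_oscar": True, "embedding": [0.90, 0.80, 0.70, 0.60]},
]

your_face = [0.11, 0.21, 0.31, 0.41]
lookalikes = filtered_knn(your_face, actors, k=2, predicate=lambda r: r["won_oscar"])
print(lookalikes)
```

Here `actor:3` is very close to the query vector but is excluded because the flag is false.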

As mentioned above, full-text search and vector search can both be used in SurrealDB. In addition, some functions under search:: take both full-text and vector results as arguments in order to produce a single unified output.

Here is an example of one of them called search::rrf() which does this using an algorithm called reciprocal rank fusion.
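Independently of SurrealDB's own implementation, reciprocal rank fusion itself can be sketched in a few lines of Python. Each result scores the sum of `1 / (k + rank)` over every ranked list it appears in. The constant `k = 60` is the value commonly used in the literature, and the document ids and the `reciprocal_rank_fusion` helper are hypothetical; the arguments and defaults of search::rrf() may differ.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse ranked id lists: each id scores the sum of 1 / (k + rank) across the lists."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first.
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

# Hypothetical result lists, best match first.
full_text_ranking = ["doc:a", "doc:b", "doc:c"]
vector_ranking = ["doc:b", "doc:d", "doc:a"]

fused = reciprocal_rank_fusion([full_text_ranking, vector_ranking])
for doc_id, score in fused:
    print(doc_id, round(score, 5))
```

Because only ranks are used, the two searches do not need comparable score scales, which is what makes this kind of fusion convenient for mixing full-text and vector results.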

| Parameter | Default | Options | Description |
|---|---|---|---|
| DIMENSION | | | Size of the vector |
| DIST | EUCLIDEAN | EUCLIDEAN, COSINE, MANHATTAN | Distance function |
| TYPE | F64 | F64, F32, I64, I32, I16 | Vector type |
| EFC | 150 | | EF construction |
| M | 12 | | Max connections per element |
| M0 | 24 | | Max connections in the lowest layer |
| LM | 0.40242960438184466f | | Multiplier for level generation. This value is automatically calculated with a value considered as optimal. |
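The default LM value in the table matches 1 / ln(M) for the default M of 12, which is the level multiplier suggested in the original HNSW paper. That SurrealDB derives its default this way is an assumption based on the numbers agreeing; the short Python check below only verifies the arithmetic.

```python
import math

M = 12  # default max connections per element, taken from the table above
level_multiplier = 1.0 / math.log(M)  # natural logarithm

print(level_multiplier)
```

If the assumption holds, changing M would change the automatically calculated multiplier accordingly.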

Examples:

For more details, see the DEFINE INDEX statement documentation.

| SELECT Functions | Notes |
|---|---|
| vector::distance::knn() | reuses the value computed during the query |
| vector::distance::chebyshev(point, $vector) | |
| vector::distance::euclidean(point, $vector) | |
| vector::distance::hamming(point, $vector) | |
| vector::distance::manhattan(point, $vector) | |
| vector::distance::minkowski(point, $vector, 3) | third param is 𝑝 |
| vector::similarity::cosine(point, $vector) | |
| vector::similarity::jaccard(point, $vector) | |
| vector::similarity::pearson(point, $vector) | |
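For readers unfamiliar with some of these measures, the Python sketch below spells out the textbook definitions of four of the distance functions. These are plain re-implementations for illustration with arbitrary example vectors; SurrealDB's functions may treat edge cases such as mismatched lengths differently.

```python
def manhattan(a, b):
    """Sum of absolute coordinate differences."""
    return sum(abs(x - y) for x, y in zip(a, b))

def chebyshev(a, b):
    """Largest absolute coordinate difference."""
    return max(abs(x - y) for x, y in zip(a, b))

def minkowski(a, b, p):
    """Generalised distance: p=1 gives Manhattan, p=2 gives Euclidean."""
    return sum(abs(x - y) ** p for x, y in zip(a, b)) ** (1.0 / p)

def hamming(a, b):
    """Number of positions at which the two vectors differ."""
    return sum(1 for x, y in zip(a, b) if x != y)

point = [1.0, 2.0, 3.0, 4.0]
vector = [2.0, 3.0, 4.0, 4.0]

print("manhattan:", manhattan(point, vector))
print("chebyshev:", chebyshev(point, vector))
print("minkowski (p=3):", minkowski(point, vector, 3))
print("hamming:", hamming(point, vector))
```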

WHERE statement

| Query | HNSW index |
|---|---|
| `<\|2\|>` | uses distance function defined in index |
| `<\|2, EUCLIDEAN\|>` | brute force method |
| `<\|2, COSINE\|>` | brute force method |
| `<\|2, MANHATTAN\|>` | brute force method |
| `<\|2, MINKOWSKI, 3\|>` | brute force method (third param is 𝑝) |
| `<\|2, CHEBYSHEV\|>` | brute force method |
| `<\|2, HAMMING\|>` | brute force method |
| `<\|2, 10\|>` | second param is effort* |

*effort: Tells HNSW how far to go in trying to find the exact response. HNSW is approximate, and may miss some vectors.

EXPLAIN FULL clause. E.g.: `SELECT id FROM pts WHERE point <|10|> [2,3,4,5] EXPLAIN FULL;`

Vector search does not need to be complicated and overwhelming. Once you have your embeddings available, you can try out different vector functions in combination with your query vector to see what works best for your use case. As discussed in the reference guide, you have three options to perform vector search in SurrealDB. Based on the complexity of your data and accuracy expectations, you can choose between them. You can design your SELECT statements to query your search results along with filters and conditions. To avoid recalculating the KNN distance for every single query, you can also use the vector::distance::knn() function.

Due to GenAI, most applications today deal with intricate data with layered meanings and characteristics. Vector search plays a big role in analyzing such data to find what you’re looking for or to make informed decisions.

You can start using vector search in SurrealDB by installing SurrealDB on your machines or by using Surrealist. And if you’re looking for a quick video explaining Vector Search, check out our YouTube channel.

If you’re interested in understanding vector search in depth, check out this academic paper on Vector Retrieval written by Sebastian Bruch.