Amazon Elastic Kubernetes Service (Amazon EKS) is a managed service that eliminates the need to install, operate, and maintain your own Kubernetes control plane on Amazon Web Services (AWS). This deployment guide covers setting up a highly available SurrealDB cluster backed by TiKV on Amazon EKS.

- kubectl, to manage the Kubernetes cluster.
- eksctl, installed.

Cost considerations: Provisioning the environment in your AWS account will create resources that incur costs. The cleanup section explains how to remove them to prevent further charges.
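To confirm that the prerequisite tools are available before you start, a small check along the following lines can help. This is a minimal sketch: the helper name `require_tools` is hypothetical and is not part of kubectl or eksctl.

```shell
# Hypothetical helper: report any of the given commands that are not on PATH.
# Returns 0 when every command is found, 1 otherwise.
require_tools() {
  missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For this guide you might call it as `require_tools kubectl eksctl` and only continue when it succeeds.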

Important: This guide was tested in eu-west-1 (Ireland region) and follows TiKV best practices for scalability and high availability. It will provision up to 12 Amazon Elastic Compute Cloud (Amazon EC2) instances, several Amazon Elastic Block Store (Amazon EBS) volumes, and up to three Elastic Load Balancing (ELB) load balancers. The forecasted cost to run this guide is $5 USD per hour.

This section outlines how to build a cluster by using the eksctl tool. The following is the configuration that will be used to build the cluster:
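A minimal sketch of such a configuration is shown here, written to a file with a heredoc. The cluster name, instance types, node group names, and node counts are illustrative assumptions, not the values this guide was tested with. Only the region (eu-west-1) comes from the guide itself.

```shell
# Illustrative values only: cluster name, instance types and node counts
# are assumptions, not the configuration this guide was tested with.
cat > surrealdb-cluster.yml <<'EOF'
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: surrealdb-cluster      # hypothetical cluster name
  region: eu-west-1            # region this guide was tested in
iam:
  withOIDC: true               # enables IAM roles for service accounts
addons:
  - name: aws-ebs-csi-driver   # lets Kubernetes provision EBS volumes
managedNodeGroups:
  - name: default              # general workloads, including SurrealDB
    instanceType: m5.large
    desiredCapacity: 3
  - name: pd                   # TiKV placement driver nodes
    instanceType: m5.large
    desiredCapacity: 3
    labels:
      dedicated: pd
  - name: tikv                 # TiKV storage nodes
    instanceType: m5.xlarge
    desiredCapacity: 3
    labels:
      dedicated: tikv
EOF
```

Separating the placement driver and storage nodes into their own node groups is one common way to keep TiKV components isolated. Adjust sizes and counts to your own availability and cost requirements.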

Based on this configuration eksctl will:

Save the above configuration in a file named surrealdb-cluster.yml and apply the configuration file like so:
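As a sketch, applying the file comes down to a single eksctl call. The wrapper function name `create_cluster` is hypothetical. The file name is the one used in this guide.

```shell
# Hypothetical wrapper around eksctl.
# $1: path to the eksctl config file (defaults to the name used in this guide)
create_cluster() {
  eksctl create cluster -f "${1:-surrealdb-cluster.yml}"
}
```

Running `create_cluster` with no arguments is equivalent to `eksctl create cluster -f surrealdb-cluster.yml`.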

Note: The deployment of the cluster should take about 30 minutes.

The following instructions will install TiKV operators in your EKS cluster.
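One way to do this is with the TiDB Operator from PingCAP, which manages TiKV clusters on Kubernetes. The sketch below assumes that operator and assumes version v1.5.0. Both are assumptions rather than details confirmed by this guide, so check the current release before use. The function name is hypothetical.

```shell
# Sketch: install the TiDB Operator (assumed here to be the TiKV operator).
# $1: operator version (assumption: v1.5.0)
install_tikv_operator() {
  version="${1:-v1.5.0}"
  # Custom resource definitions required by the operator
  kubectl create -f "https://raw.githubusercontent.com/pingcap/tidb-operator/${version}/manifests/crd.yaml" || return 1
  # The operator itself, from the PingCAP Helm chart repository
  helm repo add pingcap https://charts.pingcap.org/ || return 1
  helm repo update || return 1
  helm install tidb-operator pingcap/tidb-operator \
    --namespace tidb-operator --create-namespace \
    --version "$version"
}
```

After calling `install_tikv_operator`, `kubectl get pods --namespace tidb-operator` should list the operator pods once they have started.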

The following instructions will install the AWS Load Balancer Controller.

The AWS Load Balancer Controller provisions and manages the necessary AWS resources when Kubernetes creates an Ingress or a LoadBalancer Service.

Formerly known as AWS ALB Ingress Controller, it’s an open-source project on GitHub.
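A minimal sketch of a Helm-based installation follows. It assumes that a service account named aws-load-balancer-controller, bound to an IAM role with the controller's policy, already exists in the kube-system namespace. The function name is hypothetical.

```shell
# Sketch: install the AWS Load Balancer Controller with Helm.
# $1: EKS cluster name
# Assumes the IAM-backed service account aws-load-balancer-controller
# already exists in kube-system.
install_lb_controller() {
  helm repo add eks https://aws.github.io/eks-charts || return 1
  helm repo update || return 1
  helm install aws-load-balancer-controller eks/aws-load-balancer-controller \
    --namespace kube-system \
    --set clusterName="$1" \
    --set serviceAccount.create=false \
    --set serviceAccount.name=aws-load-balancer-controller
}
```

Call it with the name of your cluster, for example `install_lb_controller my-cluster`, where `my-cluster` is a placeholder.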

The following script will install SurrealDB on your EKS cluster backed by TiKV with a public endpoint exposed via an Application Load Balancer (ALB).
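As a rough sketch, such an installation could use the SurrealDB Helm chart. It assumes that a TiKV cluster is already running and that its placement driver (PD) service address is known. The chart value names (`surrealdb.path`, `ingress.enabled`, `ingress.className`, `ingress.annotations`), the release name, and the function name are assumptions to verify against the chart's values file.

```shell
# Sketch: install SurrealDB backed by an existing TiKV cluster.
# $1: Helm release name (hypothetical, e.g. surrealdb-tikv)
# $2: TiKV placement driver (PD) address as host:port
# Chart value names below are assumptions; verify them against the chart.
install_surrealdb() {
  helm repo add surrealdb https://helm.surrealdb.com || return 1
  helm repo update || return 1
  helm install "$1" surrealdb/surrealdb \
    --set surrealdb.path="tikv://$2" \
    --set ingress.enabled=true \
    --set ingress.className=alb \
    --set 'ingress.annotations.alb\.ingress\.kubernetes\.io/scheme=internet-facing' \
    --set 'ingress.annotations.alb\.ingress\.kubernetes\.io/target-type=ip'
}
```

The two annotations ask the AWS Load Balancer Controller for an internet-facing ALB that routes directly to pod IP addresses. Because this makes the database publicly reachable, make sure authentication is configured before exposing it.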

You can get the endpoint to use with your SurrealDB client as follows:
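One way to read the endpoint is to query the Ingress status with kubectl, as sketched below. The Ingress name `surrealdb-tikv` is a hypothetical default and depends on how SurrealDB was installed. The function name is also hypothetical.

```shell
# Sketch: print the load balancer host name assigned to the SurrealDB Ingress.
# $1: Ingress name (hypothetical default: surrealdb-tikv)
get_surrealdb_endpoint() {
  kubectl get ingress "${1:-surrealdb-tikv}" \
    -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'
}
```

You could then store the result for later commands, for example `SURREALDB_ENDPOINT=$(get_surrealdb_endpoint)`. The value may be empty for a few minutes while the load balancer is being provisioned.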

Test your connection with the following command:
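A simple connectivity check, offered here as a sketch rather than the guide's exact command, is to call SurrealDB's HTTP `/health` endpoint with curl. It assumes the load balancer listens on plain HTTP. The function name is hypothetical.

```shell
# Sketch: succeed only if the SurrealDB health endpoint answers with a 2xx status.
# $1: endpoint host name of the load balancer
surrealdb_is_healthy() {
  curl --fail --silent --show-error --max-time 10 "http://$1/health"
}
```

For example, `surrealdb_is_healthy "$SURREALDB_ENDPOINT" && echo "SurrealDB is reachable"`, where `SURREALDB_ENDPOINT` holds the host name of your load balancer.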

Cleanup can be performed with the following commands.
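A minimal sketch of a cleanup sequence follows. It removes the SurrealDB Helm release first, so that the load balancer created for its Ingress is deleted, and then deletes the cluster with eksctl. The release name and the function name are hypothetical.

```shell
# Sketch: remove the SurrealDB release, then delete the EKS cluster.
# $1: Helm release name of the SurrealDB installation (hypothetical)
# $2: eksctl config file (defaults to the name used in this guide)
cleanup_cluster() {
  # Uninstall first so the load balancer is removed together with the Ingress
  helm uninstall "$1" || return 1
  eksctl delete cluster -f "${2:-surrealdb-cluster.yml}" --wait
}
```

The `--wait` flag makes eksctl block until the deletion has finished, which makes failures easier to spot.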

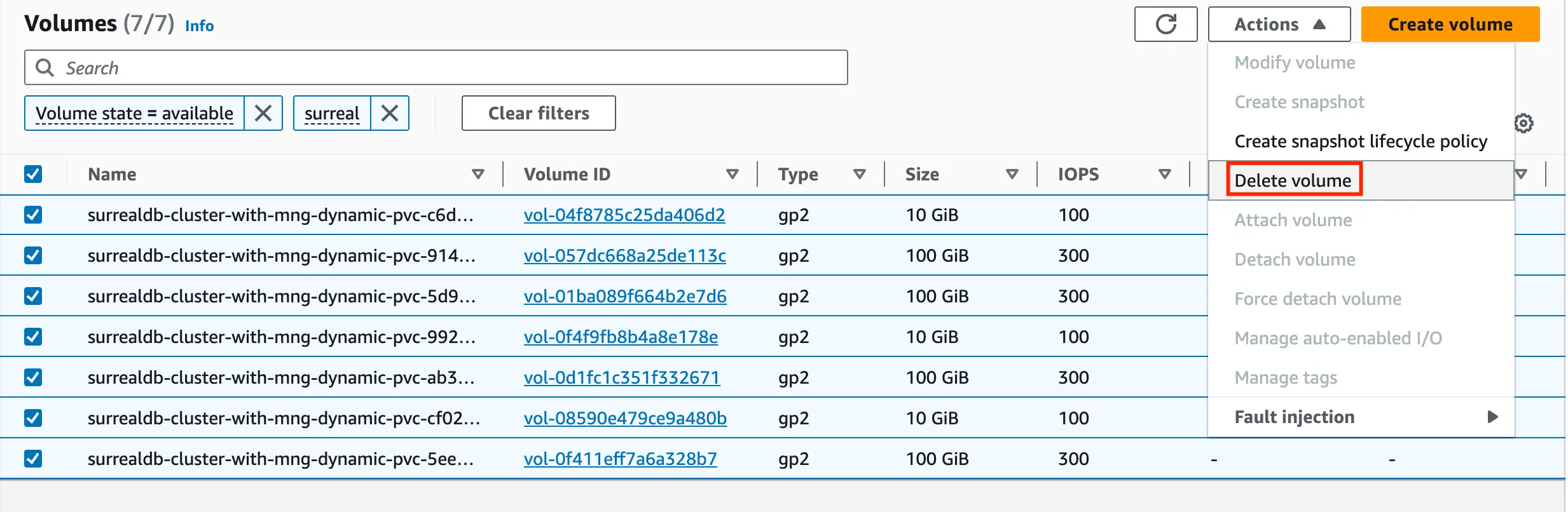

The default cleanup behaviour is to preserve resources such as EBS volumes that were previously attached to your cluster. If this is not what you want, and in order to prevent you from incurring in additional charges related to the usage of these block storage devices, navigate to the AWS console and manually delete all volumes that were attached to your cluster, as shown in figure.
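As an alternative to the console, the AWS CLI can list volumes that are no longer attached to any instance. The sketch below assumes the AWS CLI is installed and configured. The function name is hypothetical.

```shell
# Sketch: list the IDs of EBS volumes in the "available" (unattached) state.
# $1: AWS region (defaults to eu-west-1, the region this guide was tested in)
list_unattached_volumes() {
  aws ec2 describe-volumes \
    --region "${1:-eu-west-1}" \
    --filters Name=status,Values=available \
    --query 'Volumes[].VolumeId' \
    --output text
}
```

The list includes every unattached volume in the region, not only those created by this guide, so review it before deleting anything. A volume can then be removed with `aws ec2 delete-volume --region eu-west-1 --volume-id <volume-id>`.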